Do you want a flake with that?

Hello!

I am here to talk to you about flaky tests.

I am an engineering manager at Cleo. We’re hiring, so talk to me after if that’s interesting. We are an AI coach for financial health.

Of interest today, I guess, is that we have a rails monolith that we ship multiple times a day, and flaky tests really get in the way of shipping because they stop you from … shipping, because CI is like “No! It’s red mate! You can’t.”

This is a talk I gave internally at a backend dev day, not January, but the previous January. Some of it might be Cleo specific, but I don’t really think it is.

Our context is, as I said, it’s a rails monolith that mostly has no UI, because we write APIs that are consumed by a react native app. The tests that I’m talking about are for the rails monolith – we don’t really have much in the way of full end to end, “touch the app and make it talk to the backend” style tests. We don’t have many of them, so most of what I’m talking about is unit style testing, not integration or system style testing.

We also use minitest, most of you probably use RSpec. The techniques are not really that different, but that does provide some context.

And we probably use some gems to help us that you don’t. But again, I think the techniques are generally applicable.

A guide

So what are we going to cover?

- We’re going to talk about understanding. Why do flaky tests happen in the first place?

- We’re going to talk about avoiding them. How can we recognise where we might be putting a flaky test into place and what we can do to mitigate that before it even runs on CI.

- And then last, we’ll talk about how to resolve them. Okay, CI has broken, and it’s a flaky test. How do we resolve it? How do we go from red, sad, flaky to green?

Understanding

First up: understanding. Why do flaky tests happen?

Tests want to be in isolation

My central thesis is that tests work best in isolation. Now, Fred’s just given us an amazing talk, using an extended metaphor about Chernobyl, and I am also going to try an amazing metaphor. So hold on to your hats.

Tests want to be in isolation - a concert metaphor (part 1)

If you think of a test suite like a concert then each individual test is one of these people in the audience, enjoying their night out.

Going to a concert is great, but …

- someone might spill beer on you

- or they might talk throughout all the quiet songs

- or they might jump on your feet moshing to the fast songs

That’s not enjoyable. That’s ruining your night. You’re there to enjoy the music (the running of the test suite), but someone has spilled beer on you (created a flaky test).

That’s not great.

Tests want to be in isolation - a concert metaphor (part 2)

However…

What if your test suite was more like this Covid era Flaming Lips gig where every audience member is contained safely in a lovely Zorb and cannot be influenced or interrupted by any of the other audience members? You can all enjoy this lovely night out without having beer spilled on you, unless you spilled beer on you yourself, but that’s okay, because that’s you. That’s your test doing that. That’s fine.

So yes, a lot more effort and maybe not quite as much fun, but everyone gets a safe night out.

Cool.

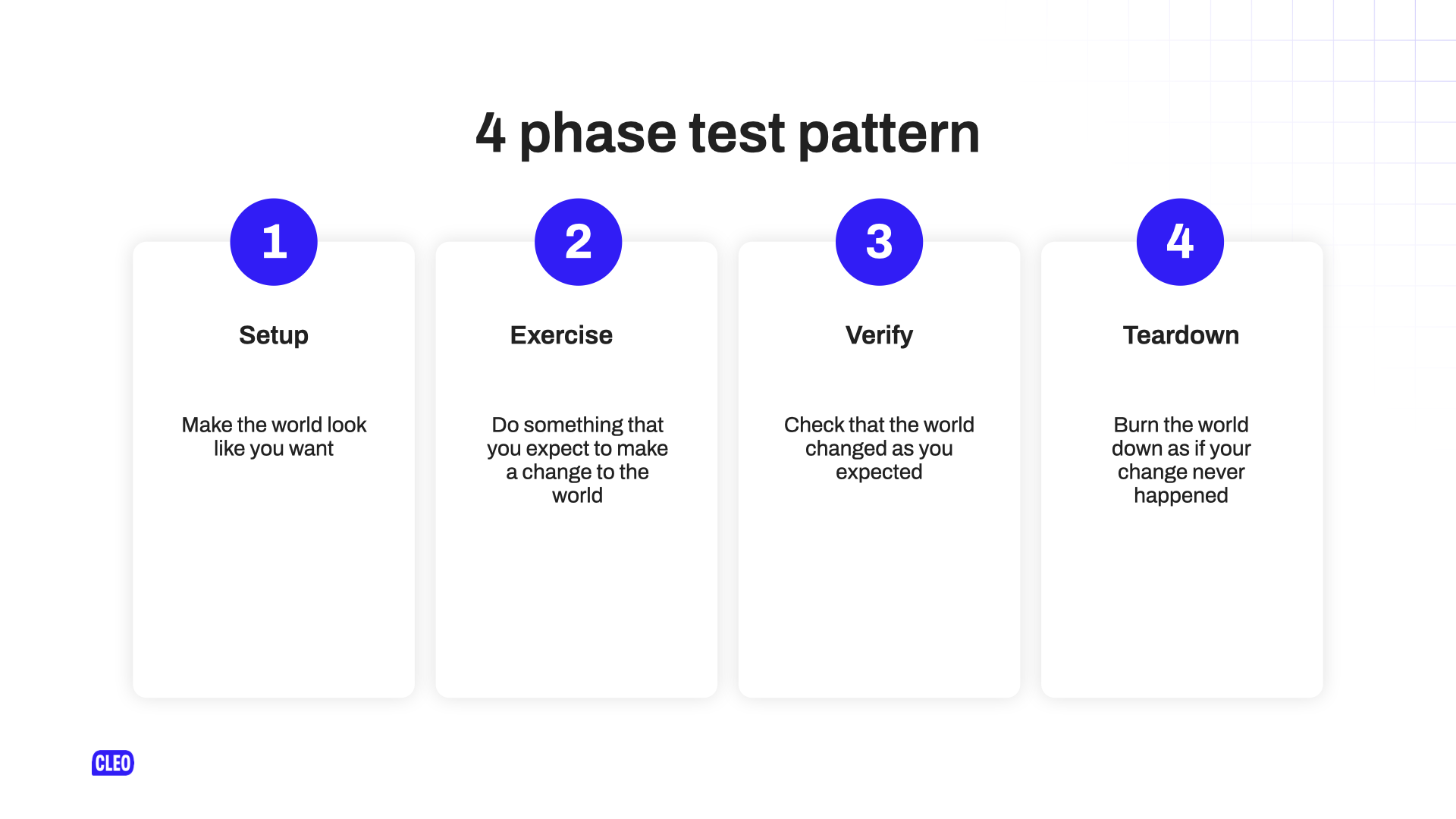

The 4 phase test pattern

My central thesis is that tests work best in isolation. Most tests achieve this using the 4 phase testing pattern. How this works is that when you run a test:

- First you set it up: you make the world look like you want it to.

- Next you exercise: you do something that you expect to change the world.

- Then you verify: you check that the change you applied has mutated the world in the way you expected.

- And finally, you tear down: you burn the world down, reset it back to as if nothing had ever happened. Nothing in your setup. Nothing in your exercise. You probably shouldn’t change the world in your verify, but if you did, nothing that happened there has happened, and your world is back to step 0.
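As a rough sketch of those four phases in minitest: the `BlogPosts` class here is a made-up in-memory stand-in for a database table (it is an assumption for illustration, not something from our app), and the comments mark which phase each line belongs to.

```ruby
require "minitest/autorun"

# Hypothetical in-memory stand-in for a database table.
class BlogPosts
  @posts = []

  class << self
    attr_reader :posts

    def create(title)
      @posts << title
    end

    def clear
      @posts.clear
    end
  end
end

class BlogPostsTest < Minitest::Test
  def setup
    # 1. Setup: make the world look the way this test needs.
    BlogPosts.clear
  end

  def teardown
    # 4. Teardown: put the world back to step 0 for whoever runs next.
    BlogPosts.clear
  end

  def test_creating_a_post_stores_it
    # 2. Exercise: do the thing we expect to change the world.
    BlogPosts.create("Do you want a flake with that?")

    # 3. Verify: check the world changed the way we expected.
    assert_equal 1, BlogPosts.posts.size
  end

  def test_there_are_no_posts_to_begin_with
    assert_empty BlogPosts.posts
  end
end
```

Because both setup and teardown reset the store, the two tests should pass whichever order they run in.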

Isolation is breached!

The problem is when what you hoped was isolated in those 4 stages turns out not to be. Something else happened!

- When you set up the world, it turns out it didn’t actually look how you expected.

- When you exercised in the world, the change didn’t apply in the way that you expected.

- Verification then fails, obviously, because what you expected to happen … didn’t.

- Maybe when you tore things down it didn’t reset the world.

Something has happened, and isolation has been breached.

And that’s my central thesis.

Avoiding

Now we know why flaky tests happen, we’ll talk about how we might avoid them.

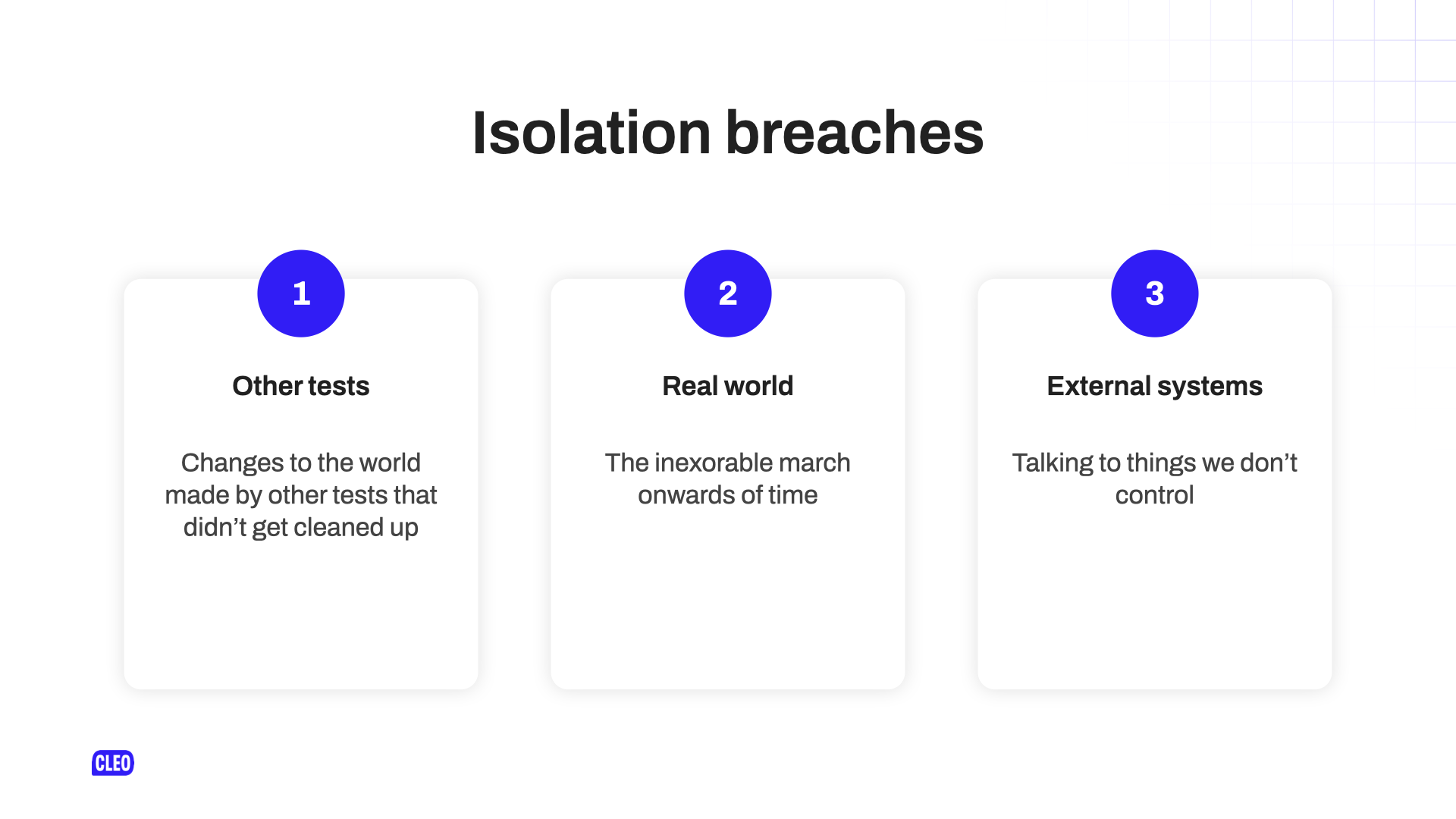

Isolation breaches

I’ve got 3 possible isolation breaches that we’re going to talk about:

- The first one is other tests: other tests have done something that hasn’t cleaned up the world and has left it in a weird state, and that is impacting us.

- The second problem is the real world: our tests have to interact with the real world, and mostly I’m just going to moan about time.

- The third one is that we have to talk to external systems: we maybe don’t control them so we can’t set them up in our world exactly as we want them to be.

Other tests - Intro

First up: other tests.

Things that one test does in the setup or exercise, or maybe verify or tear down, that just doesn’t get cleaned up. So when our test runs it’s not clear… it’s not…

The world’s not set up the way we expected.

So let’s go through some examples.

Other tests - changes to the database

If you write to the database in your test, and you don’t undo that change, the next test that’s running might break, because you expect there to be a single transaction in your database.

That’s a poor choice of words. We at Cleo work in finance so we care about transactions meaning moving money around. But also, I’m trying to talk about database transactions. Cool.

So in one test you write a … blog post … to the database. In your next test you expect there to be no blog posts in the database, and you write a blog post to the database, and then you say, “Hey, this should now have meant there’s only one blog post in the database”, but because you didn’t clean up the first one there are, in fact, 2 blog posts in the database, and your test fails.

So obviously, we can kind of fix that with things that come out of the box with a rails app like wrapping everything in a transaction: all your tests run in a transaction, then writes into the database are not impacted between test one and test two.

You could use the database cleaner gem to give you a bit more fine-grained control over how you deal with the database. You may want a completely empty database, or you might want to use fixtures or factories to completely isolate the world and say, “I care about this shape of data being in at the start of my world setup, and I’ll use transactions and database cleaner to make sure that that is true at the start of every single test”.

Other tests - changes to environment variables

Something else that might be problematic is that you might be testing the behaviour of your system depending on some environment variables. You might set the environment variable in one test, but don’t unset it. Your next test doesn’t expect the environment variable to be set to, for example, run all of these A/B tests, but your system under test says, “Oh, right, the environment variable is set to run all these A/B tests”, and so when your test runs, the system behaves differently to how the test expected it to, and the test fails.

We can fix that by stubbing ENV or stubbing the things that act based on ENV to say, “I don’t care what you say, but this is how you’re supposed to behave right now”.

There is a gem called climate control that lets you effectively handle stubbing of the environment. You use a block to say, “Oh, set the environment to this, run the code, then unset it.”

Those are some things you can do to avoid flaky tests by controlling how and when you make changes to the environment.
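To make the "set it, run the code, unset it" idea concrete, here is a minimal hand-rolled sketch. `with_env` and the `RUN_AB_TESTS` variable are hypothetical names for illustration; as far as I know the climate control gem gives you the same shape through `ClimateControl.modify`, so you would normally reach for that rather than writing your own.

```ruby
# Hypothetical helper, hand-rolled for illustration: set some environment
# variables for the duration of a block, then restore whatever was there before.
def with_env(overrides)
  originals = overrides.keys.to_h { |key| [key, ENV[key]] }
  overrides.each { |key, value| ENV[key] = value }
  yield
ensure
  # Assigning nil deletes the variable, so previously unset variables stay unset.
  originals&.each { |key, value| ENV[key] = value }
end

with_env("RUN_AB_TESTS" => "true") do
  # Code in here sees the overridden value.
  puts ENV["RUN_AB_TESTS"]
end

# Out here the variable is back to whatever it was before the block.
puts ENV["RUN_AB_TESTS"].inspect
```

The `ensure` matters: if the block raises (say, a failing assertion), the environment is still put back.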

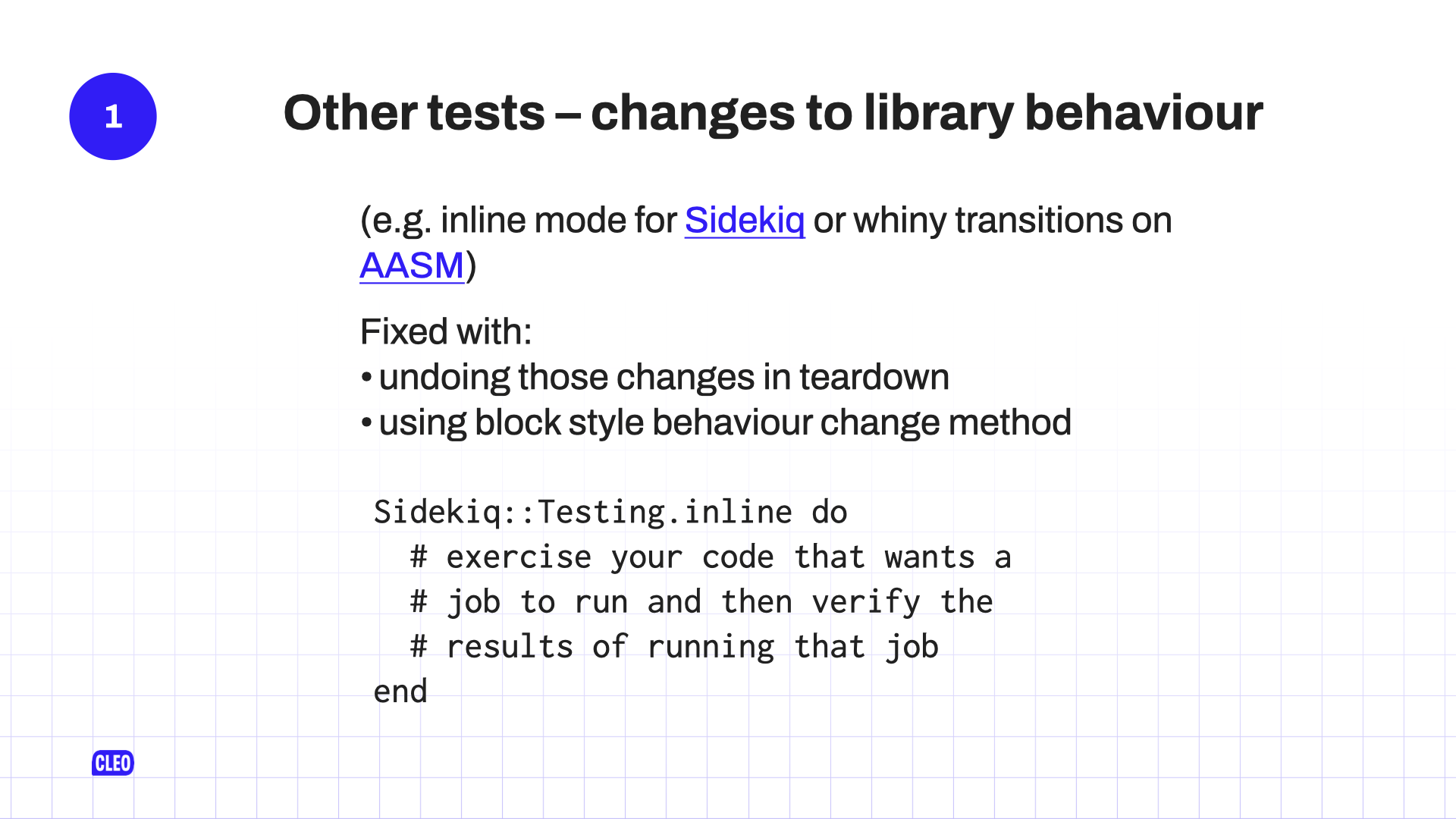

Other tests - changes to library behaviour

Sometimes you might want to change how an underlying library behaves. So, for example, we use sidekiq (secretly, our app is a sidekiq monolith, not a rails monolith).

It has different ways of running. In testing mode you can set it to “inline” mode which means when we push a job to the background, actually run it, versus, I think the default mode is to not do that and do something else. I think maybe just record that a job has been pushed to the queue. So you can tell sidekiq, “hey? Can you run in this mode, please?”

If you do that in one test and don’t undo it, then your next test may not expect that, and you’ll get failures because the job has or hasn’t run, depending on what you really wanted.

Probably lots of your other libraries have similar modes like this. We use AASM, which is “Acts As State Machine”. It has a mode where you can turn on throwing exceptions if you do a transition with an event that you’re not really allowed, or it can be told to not do that. You may want to turn it on or off in your test, depending on what you’re doing. If you forget to undo that? Problems.

You should make sure you undo those changes in your teardown, or you can see if your library provides helpful block methods that you can put in an around wrapper that will run around all of your test cases to change the mode for the tests where you actually care about that behaviour.
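Here is a sketch of what one of those "helpful block methods" looks like from the inside. `FakeJobs` is a hypothetical library invented for this example, not a real gem's API; I believe sidekiq's testing helpers offer a similar block form (something like `Sidekiq::Testing.inline! { ... }`), but check your version's docs.

```ruby
# Hypothetical library with a global mode switch, standing in for something
# like a background job processor.
module FakeJobs
  @mode = :fake
  @queued = []

  class << self
    attr_reader :mode, :queued

    # Switch mode for the duration of the block only, restoring the previous
    # mode even if the block raises.
    def with_mode(mode)
      previous = @mode
      @mode = mode
      yield
    ensure
      @mode = previous
    end

    # In :inline mode run the job now; otherwise just record it.
    def push(job)
      if mode == :inline
        job.call
      else
        queued << job
      end
    end
  end
end

results = []

FakeJobs.with_mode(:inline) do
  FakeJobs.push(-> { results << :ran_immediately })
end

# Back in the default mode: the job is recorded but not run.
FakeJobs.push(-> { results << :never_runs_here })

puts results.inspect
puts FakeJobs.mode
```

The point of the block form is that there is no "undo" step to forget: the mode change cannot outlive the block.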

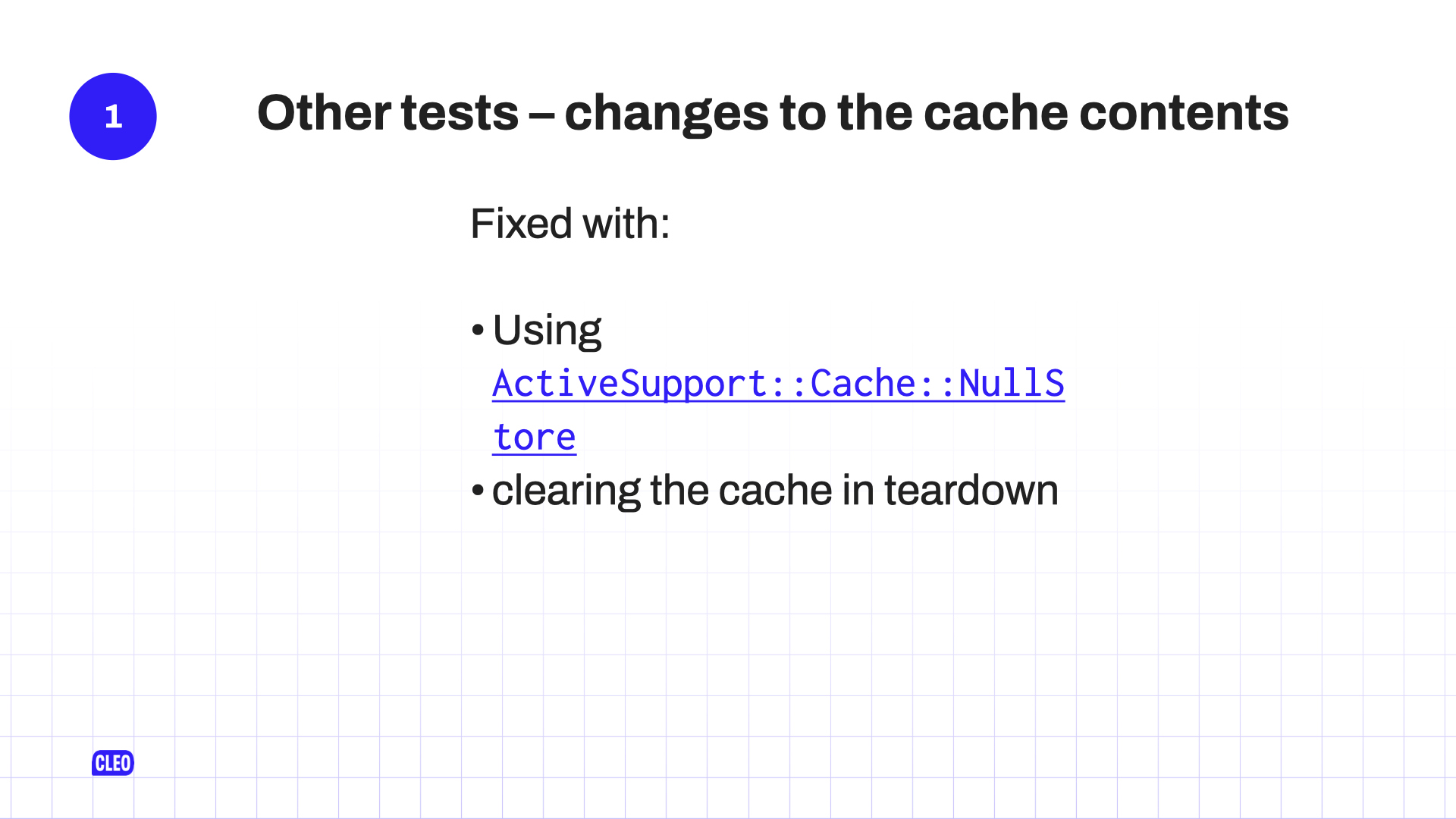

Other tests - changes to the cache contents

Similarly, you may have a system that uses some kind of caching. If one test puts something into the cache, your next test may behave differently, based on what is in that cache, so you can do a bunch of things there:

You could use the ActiveSupport::Cache::NullStore, which gives you the caching interface without actually storing anything. It does implement the local cache strategy, so values are stored for the duration of a block that uses that strategy, but not outside of that block.

Or …

You can make sure to always empty your caches in your teardowns.

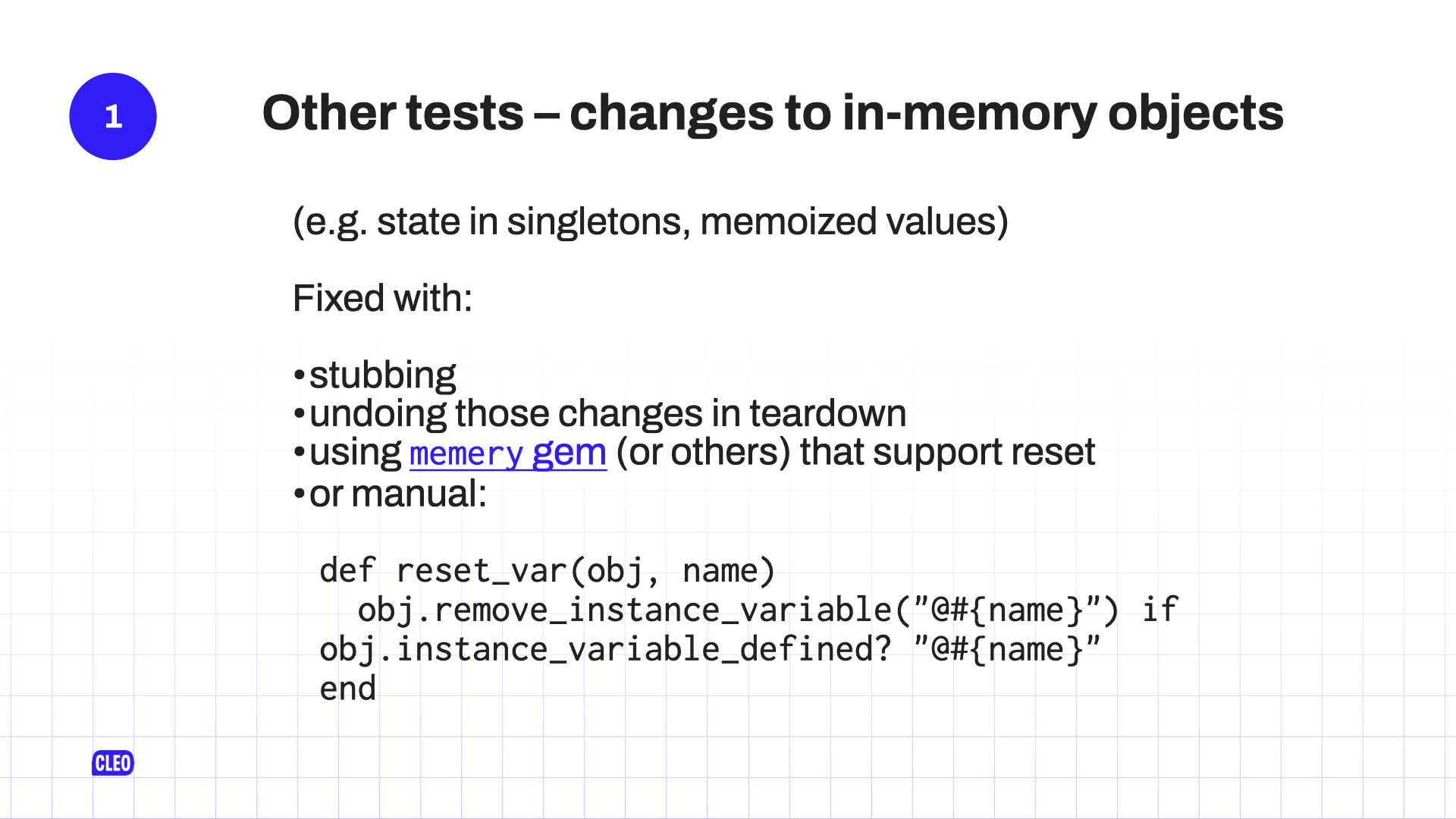

Other tests - changes to in-memory objects

And similarly, maybe you’ve got some in-memory objects like Singletons, or memoized values on your objects. If you set one in one of your tests and then don’t remember to unset it, in your next test the system behaves in a way that you don’t expect.

So, again, we fix this by stubbing the object to make it behave in your test, regardless of the implementation or any stored data, exactly how you want it. Or … you undo those memoized changes in teardown.

Maybe you are smart, and you use a gem like memery or memoist, or any number of other things for doing memoization. It will probably have some function to clear the memoised cache of your object. Make sure you call that in your teardowns.

Or do something awful yourself and create a method for resetting these variables like the reset_var defined here, where you assume that your variables all have the right name, and they’ve been stored in an instance variable. And you just reach in and kick them out in your own teardown method.

Sometimes all of the things I’ve mentioned might combine in weird ways, such as: I have an environment variable and I’ve stubbed that so ENV is some value for the duration of this test and I’ve reset ENV after. But… maybe I’m memoizing the value of ENV in some Singleton object, so when I stub an ENV change here, I’ve forgotten to stub the memoization of the ENV value in some other part of the system. You’ve got to think about how these might combine in fun and annoying ways.
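A small sketch of that ENV-plus-memoization combination, in plain Ruby. `Settings`, `ab_tests_enabled?`, `reset!` and `RUN_AB_TESTS` are all hypothetical names for illustration.

```ruby
# Hypothetical settings object that memoizes a value derived from ENV.
module Settings
  def self.ab_tests_enabled?
    # defined? rather than ||= so that a memoized false is kept too.
    return @ab_tests_enabled if defined?(@ab_tests_enabled)

    @ab_tests_enabled = ENV["RUN_AB_TESTS"] == "true"
  end

  # Call this from teardown so the next test re-reads ENV.
  def self.reset!
    remove_instance_variable(:@ab_tests_enabled) if defined?(@ab_tests_enabled)
  end
end

ENV["RUN_AB_TESTS"] = "true"
first = Settings.ab_tests_enabled?  # reads ENV and memoizes the answer

ENV.delete("RUN_AB_TESTS")           # the "teardown" resets ENV...
stale = Settings.ab_tests_enabled?  # ...but the memoized answer survives

Settings.reset!
fresh = Settings.ab_tests_enabled?  # re-read after clearing the memo

puts [first, stale, fresh].inspect
```

Resetting ENV alone was not enough here; the memo had to be cleared as well before the next read reflected the real environment.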

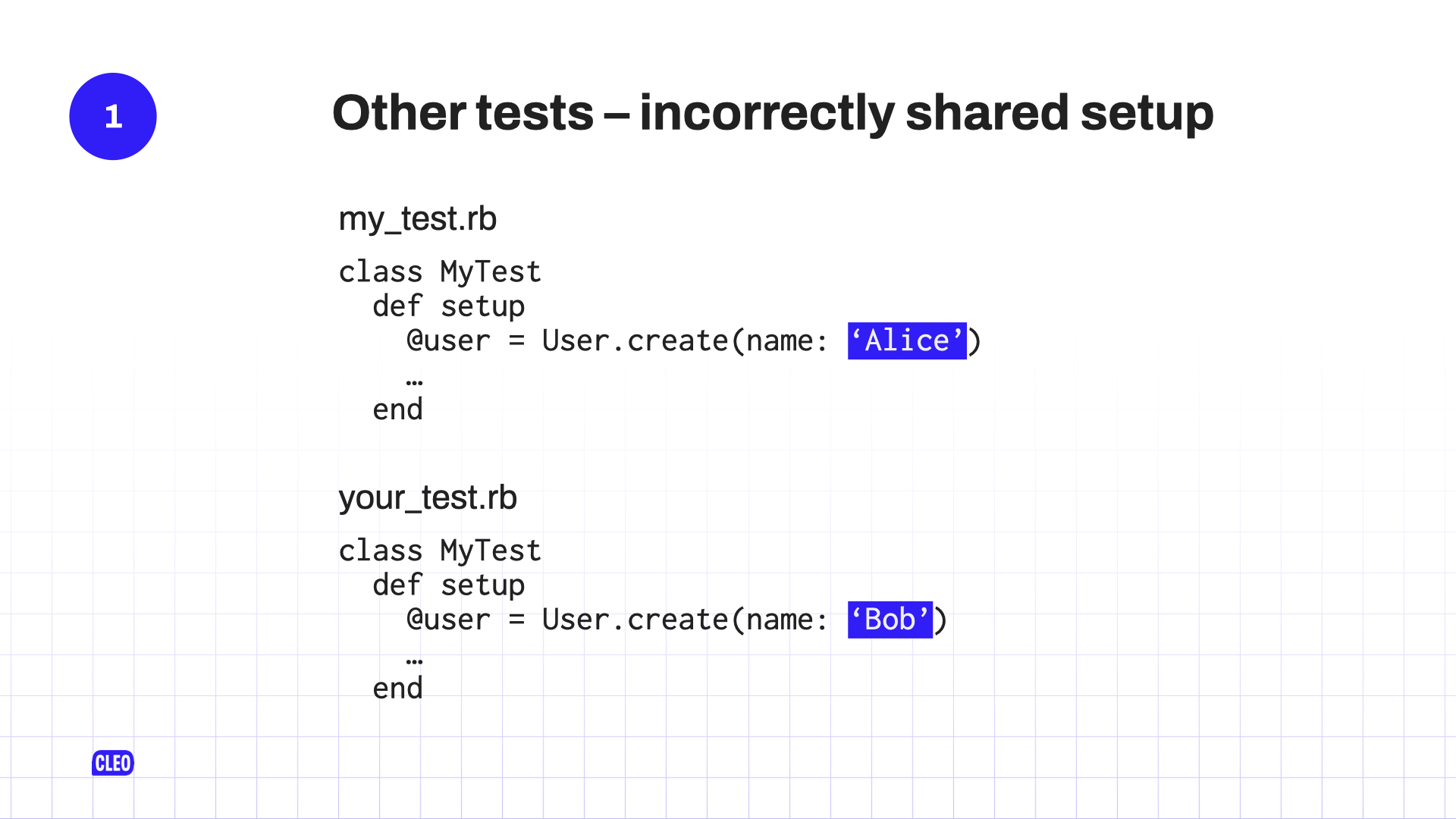

Other tests - incorrectly shared setup (part 1)

This one might not apply if you are using RSpec.

But basically as devs, sometimes we’re kinda lazy. I think there’s a quote that says lazy devs are good devs. So this is fine.

Sometimes when we’re writing a new feature we copy and paste the test from another feature and then tweak it. The thing that we forget to do is: we forget to change the name of the test class. It might live in a file called your_test.rb, but we copy and pasted it from my_test.rb and their setup methods are very unlikely to be exactly the same and work for both of them.

Depending on the order that these tests get run, my_test.rb might fail because it’s expecting “Alice” in the user’s name, but it got “Bob”, or your_test.rb may fail because it’s expecting “Bob” and got “Alice”. Or maybe your_test.rb doesn’t care what the value of the user’s name is, so only when your_test.rb runs after my_test.rb does it actually cause a problem.1
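The mechanism behind this is just Ruby reopening classes. Here is a plain-Ruby sketch with no test framework involved; imagine each class body lives in its own file, and treat the names as illustrative.

```ruby
# my_test.rb
class MyTest
  def setup
    @user_name = "Alice"
  end

  def user_name
    @user_name
  end
end

# your_test.rb, copied from my_test.rb without renaming the class. Ruby
# reopens MyTest instead of defining a new class, and this setup replaces
# the one above.
class MyTest
  def setup
    @user_name = "Bob"
  end
end

test = MyTest.new
test.setup
puts test.user_name # prints "Bob"
```

Whichever file is loaded last wins, so every test in the shared class runs with that file's setup.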

Other tests - incorrectly shared setup (part 2)

We fixed this after I spotted it a bunch of times in our code by running the rubocop Naming/FileName cop on our test files, which we normally don’t do, because who cares about the names of your tests? Turns out we do.

We run that and it complains when the file name and the class name don’t match. It’s a bit annoying, because then you have to make your class names and file names match in all the tests, and sometimes that’s not what you actually want, because the file name is a placeholder for a bunch of more helpfully named tests. But you have to go through it and you solve it, and then you no longer have that problem.

Real world - Time

Okay, so that’s other tests. The tl;dr of which is:

- do stuff in your setup

- make sure you undo it in your teardown

Do that and you’ll be fine.

The next thing to talk about is the real world.

As a person who exists and is getting older: I hate time. As a programmer: I really hate time.

Time is … time is weird. There are calendars, and business days, and leap days, and leap seconds, and time zones, and date math, and date manipulation, and Gregorian versus Julian calendars, and … I hate it all so much because I’m a programmer, and I. Just. Hate. It.

Any programming that has to deal with time, or worse, dates, or both is a nightmare. If you’ve ever done anything with calendars in your product, you will know what I mean, and tests are no exception to this.

Real world - Avoiding Time (part 1)

Even if our tests are fast, time does march inexorably onwards.

That means that sometimes the test will run such that it crosses a second, or it crosses an hour, or it crosses a day, or a month, or something like that, which means what you thought was true about time at the start of the test is now different to the end of the test.

So you compare times and annoyingly when you say “oh, expect that it’s today”, it’s not today anymore, because you ran it at midnight. Haha! Joke’s on you.

There are lots of solutions to this where you use the timecop gem, or you use the time helpers that come with ActiveSupport to say “Time no longer moves! Freeze time!” and then your test doesn’t have to deal with time moving, and that is weird, but it will stop some functionality of your tests failing because you’ve skipped over a boundary that you didn’t expect.
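To show the idea without pulling in a gem, here is a sketch of a different but related technique: passing a clock into the code under test, so the test can supply one that never moves. `Post`, `created_today?` and `FrozenClock` are hypothetical names. In a rails app you would more likely use `freeze_time` or `travel_to` from ActiveSupport's time helpers, or `Timecop.freeze`, which stop the global clock instead.

```ruby
require "date"

# Hypothetical code under test: it asks a clock for "now" instead of calling
# Time.now directly, so a test can hand it a clock that never moves.
class Post
  def initialize(created_at:, clock: Time)
    @created_at = created_at
    @clock = clock
  end

  def created_today?
    @created_at.to_date == @clock.now.to_date
  end
end

# A clock frozen at one instant, one second before midnight.
FrozenClock = Struct.new(:now)
clock = FrozenClock.new(Time.utc(2024, 4, 8, 23, 59, 59))

post = Post.new(created_at: clock.now, clock: clock)
puts post.created_today?
```

With the real clock, the same check could flip from true to false if the test happened to straddle midnight.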

Real world - Avoiding Time (part 2)

Another reason to try and avoid time is because, like I said, it’s weird: leap seconds, month durations, working days, daylight savings time. All of that stuff is super annoying.

You might want to run a test that is fine 200 days of the year, but if you run it on, say:

- the 1st of the month – it breaks,

- or if you run it on the 31st of the month – it breaks

- or if you run it on a Friday – it breaks because the test assumes the next business day is tomorrow,

- or if you assume that when you add 30 days to today you’ll always be in the same month

- or any of the weird stuff that might happen, because you forgot about how awful time is

So again, we might want to freeze time, but to a specific date and time that means you’re not going to have these problems when you run your test.

Now, we’re all nerds, we’re all pedantic, we’re thinking: “yeah, but now you haven’t tested all of the conditions”. And, yeah, well… go and write specific conditions for your time functions so you can say:

- “I’ve got a test for what happens on the first of the month.”

- “I’ve got a test for what happens on the third Friday of a February that has a 29th in it.”

Write specific test cases for those weird edge cases, because that’s what tests are for! The day your suite happens to run on the third Friday of February is not when you want to find out that your system doesn’t work on the third Friday of February, etc.2
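As a sketch of pinning the awkward dates explicitly: `next_business_day` below is a hypothetical function (it ignores public holidays to stay short), and each edge case gets its own fixed date instead of depending on when the suite runs.

```ruby
require "date"

# Hypothetical function under test: the next weekday after the given date.
# It ignores public holidays to keep the sketch short.
def next_business_day(date)
  candidate = date + 1
  candidate += 1 while candidate.saturday? || candidate.sunday?
  candidate
end

# Each awkward case gets its own pinned date instead of relying on today.
friday = Date.new(2024, 4, 5)
day_before_leap_day = Date.new(2024, 2, 28)
month_end = Date.new(2024, 5, 31)

puts next_business_day(friday)              # the following Monday
puts next_business_day(day_before_leap_day) # the leap day itself
puts next_business_day(month_end)           # rolls over into June
```

In a real suite each of these would be its own named test, so a failure tells you which edge case broke.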

Real world - Random

The other thing about the real world is randomness. There are many reasons that we might want to involve random elements in our applications.

- maybe for generating secure passwords

- maybe for generating user ids

- maybe our website is a D&D application and we want to roll d20s for people.

There are lots of reasons we want random elements in our production systems. But we don’t want random elements in our tests.

For example, if you have a factory and you put random names into user profiles, at some point, one of your names may breach some guidelines you have from a third party about how long names are allowed to be. And we all know, we’ve all read that website. We know that there are lots of things we don’t agree about, understand, or know about names, but turns out your third parties haven’t read that website, and they think names can only be 30 characters long! Apparently.

If you have a random element in your factory, at some point, you’ll get random data in your test, and your test will be like, “oh, I didn’t expect it to be this long or not that long”, and it will fail. So don’t use random elements at all.

Or, if you must use random elements, like for your d20 roller, seed it. Seeding the value of the random number generator in your test means it always uses the same random number generation – when you roll it the first time, it always gives you a 1, and when you roll a second time it always gives you a 5. Then your tests will never fail, because you’ve said “random number generator, always generate me this number”. Or, at least, they’ll be consistent.

Or we can stub the service to always return the exact number we care about, or the exact random element that we care about and this means we avoid the issues of random stuff happening in our tests.
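A minimal sketch of the seeding option, assuming a hypothetical `D20` class that accepts its random number generator as an argument. `Random.new(seed)` is Ruby's standard seeded generator; the seed value 42 is arbitrary.

```ruby
# Hypothetical d20 roller that accepts its random number generator, so a
# test can pass in a seeded one.
class D20
  def initialize(rng: Random.new)
    @rng = rng
  end

  def roll
    @rng.rand(1..20)
  end
end

# Two generators built from the same seed produce the same sequence.
first_run = D20.new(rng: Random.new(42))
second_run = D20.new(rng: Random.new(42))

rolls_a = Array.new(5) { first_run.roll }
rolls_b = Array.new(5) { second_run.roll }

puts rolls_a == rolls_b
```

Production code keeps the default unseeded generator; only the test passes a seed in.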

And just to belabour the point, really, really don’t use random elements in your factories. Friends don’t let friends use the faker gem in their factories.

External Systems

So the other thing we have to worry about is external systems. Unfortunately, we do have to interact with things completely outside of our control. So other web servers and things that we just can’t control.

How do we deal with that?

External systems - network calls

The most obvious one is, we have to make some network calls to a service like we’re gonna download a file from S3. 99% of the time that’s going to be fine. But that 1% of the time is, no doubt, when you’re trying to ship an important thing to production and S3 has gone down. And that’s maybe why you’re trying to ship an important thing to production. And yet your tests fail because they’re talking to S3. Don’t do that.

We can stub that and close down the whole network. The classic things that you might have heard of are webmock, or VCR. Basically, they sit on top of all http communication that your ruby app will make and say, “No!”

With webmock you manually stub requests, with VCR you point at some horrifying YAML files or JSON files, and it says, “use them”. They both do the job, which is to say, “you can’t talk to the Internet, talk to these instead.”

I said that we’re not doing any end-to-end browser testing or things like that, but I have in the past written apps that did that. They failed because our browser was trying to download Google analytics scripts and the browser would fail, and then the test would fail.

There’s a neat little gem called Puffing Billy with a cool steam engine as a logo. It’s basically a proxy that you put in place of your like capybara, browser thing, or selenium browser, and basically say, “Nope, you’re not allowed to talk to the internet. You, browser, talk through me, and I only let you talk to local host or prescribed things”. You’ve put in place webmock for an actual browser. Useful if you’re doing that kind of testing.

I assume that not everything you do is talking over http, which is a real shame, because I don’t know the names of any gems that will help you stub non-http requests. But you could probably look at the webmock source and find and replace Net::HTTP with Net::FTP, or Net::SSH or … Net::Gopher. I don’t know? If you’re using gopher you’re on your own, I’m really sorry.

But yeah, like, stub out other network calls the same way that we stub everything else to make it consistent and make it clear.
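If you don’t have a gem that intercepts the protocol for you, one option is to stub at the boundary of your own code instead. This is a sketch of that different technique (handing the code under test a fake client), not of how webmock or VCR work; `ReportImporter` and `FakeStorage` are hypothetical names.

```ruby
# Hypothetical code under test: it depends on "something that can fetch a
# file" rather than reaching for a real storage client itself.
class ReportImporter
  def initialize(storage:)
    @storage = storage
  end

  def line_count(key)
    @storage.download(key).lines.count
  end
end

# Test double standing in for the real storage service. It never touches
# the network and always returns the same canned content.
class FakeStorage
  def initialize(files)
    @files = files
  end

  def download(key)
    @files.fetch(key)
  end
end

storage = FakeStorage.new("reports/april.csv" => "id,amount\n1,10\n2,20\n")
importer = ReportImporter.new(storage: storage)

puts importer.line_count("reports/april.csv")
```

The test now behaves the same whether or not the real service is up, which is the whole point.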

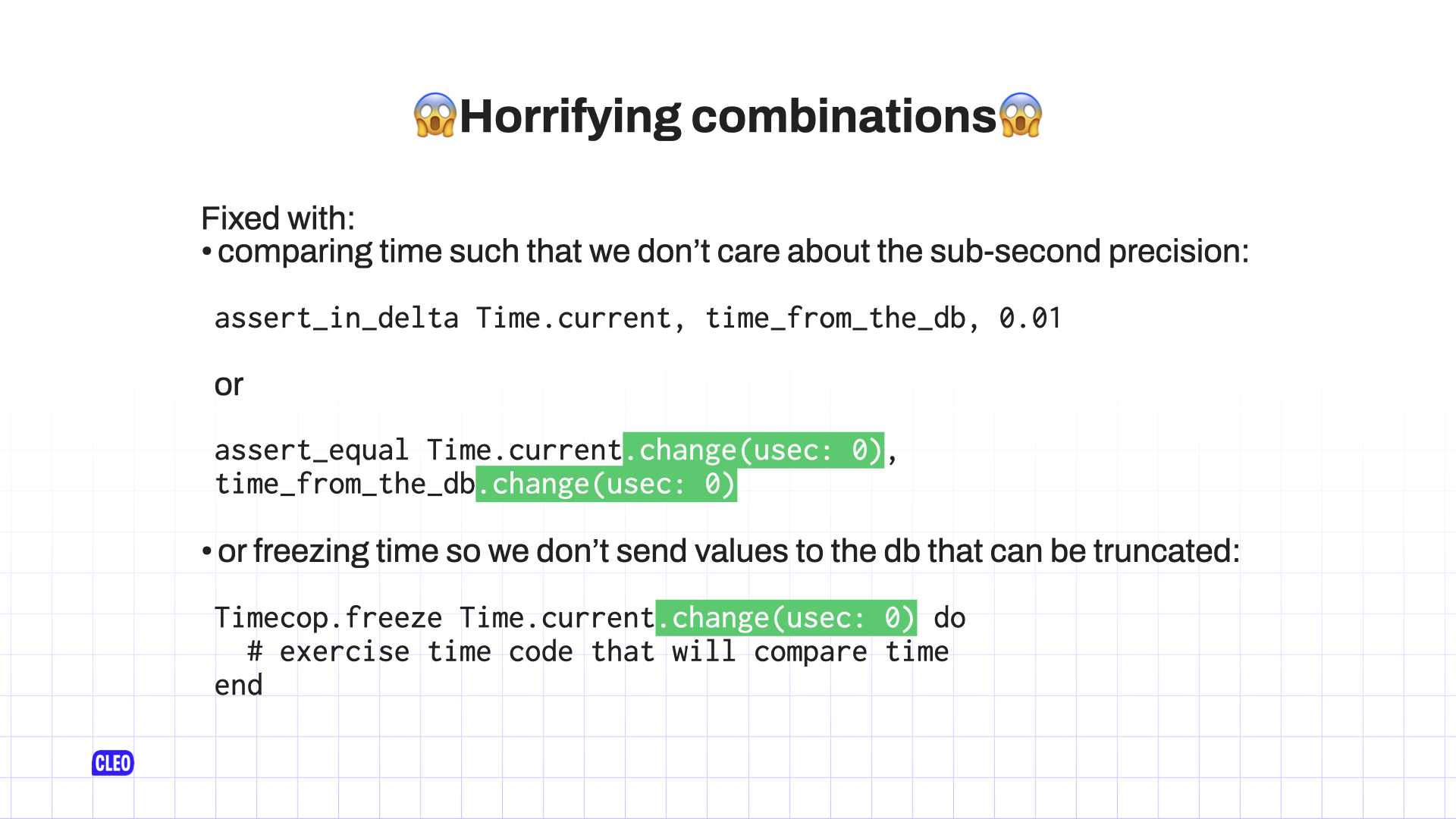

Horrifying combinations (part 1)

And, again, sometimes all of this stuff combines in truly horrifying and annoying ways.

Like a difference of opinion between how ruby and databases represent time. Our database is postgres and postgres is microsecond precise when you store data in it. Ruby time objects are nanosecond precise when you find out what the time is3.

Sometimes when you compare them, Ruby’s going to be like, “I expected ‘quarter to 8 on Monday, the 8th of April’, but I got ‘quarter to 8 on Monday, the 8th of April’”, and what has happened is it’s a fraction of a microsecond out, but it’s not gonna tell you that. But your test is going to fail.

The problem here is that you’ve extracted a time from Ruby, you’ve pushed it through a database roundtrip, and then you’ve compared them, and they’re not the same precision any more.

Horrifying combinations (part 2)

We can fix this by using assert_in_delta, which is a method that you get from minitest. Pretty sure that RSpec has something similar, probably called be_within:

expect(actual).to be_within(1.second).of(expected)

Pretty sure, Jon’s nodding4, so I think that’s right.

Or we can just ignore the microsecond precision. I’ll just say assert that this time and I don’t care how precise it is, is the same as this time and I don’t care how precise it is either.

Or we can freeze the time, so that when we’ve frozen the time, we freeze it to a time that is not microsecond precise, and then everything is not microsecond precise, and we don’t need to care about it.
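Here is a plain-Ruby sketch of the precision mismatch and two of those fixes. The round trip is simulated by dropping everything below microseconds, which is an assumption about what the database does to the value rather than a real database call.

```ruby
# Simulate a database round trip by keeping only microseconds, which is
# roughly what happens to a Ruby Time stored in a microsecond-precision column.
def simulated_round_trip(time)
  Time.at(time.to_i, time.usec, :usec)
end

original = Time.at(1_700_000_000, 123_456_789, :nsec)
stored = simulated_round_trip(original)

# Exact comparison fails: the nanoseconds were lost on the way through.
exactly_equal = original == stored

# Fix 1: compare within a tolerance, which is what assert_in_delta does.
within_delta = (original - stored).abs < 0.001

# Fix 2: compare at microsecond precision only.
equal_to_the_microsecond = original.floor(6) == stored

puts exactly_equal
puts within_delta
puts equal_to_the_microsecond
```

Both times print identically at second precision, which is why the failure message looks like it is comparing a value with itself.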

Resolving

Those are some things that I have seen about how tests have been flaky in the past, in applications that I have written.

If we are thinking about those things when we’re writing the tests and we do all that good best practice stuff then we’re going to avoid any flaky tests.

But … the reality is it’s not going to solve the problem. Our test suite still has flaky tests, even though I gave this in January over a year ago and everyone does exactly what I say.

So how do we find and fix flaky tests when they happen?

Have you tried…

Fundamentally, this is my proposal.

That you, knowing everything I’ve just told you, you just look at the failing test, think really, really hard and hope you solve it.

And, really, that is what you’re going to have to do.

But, I’m not a complete asshole, I do have some tips.

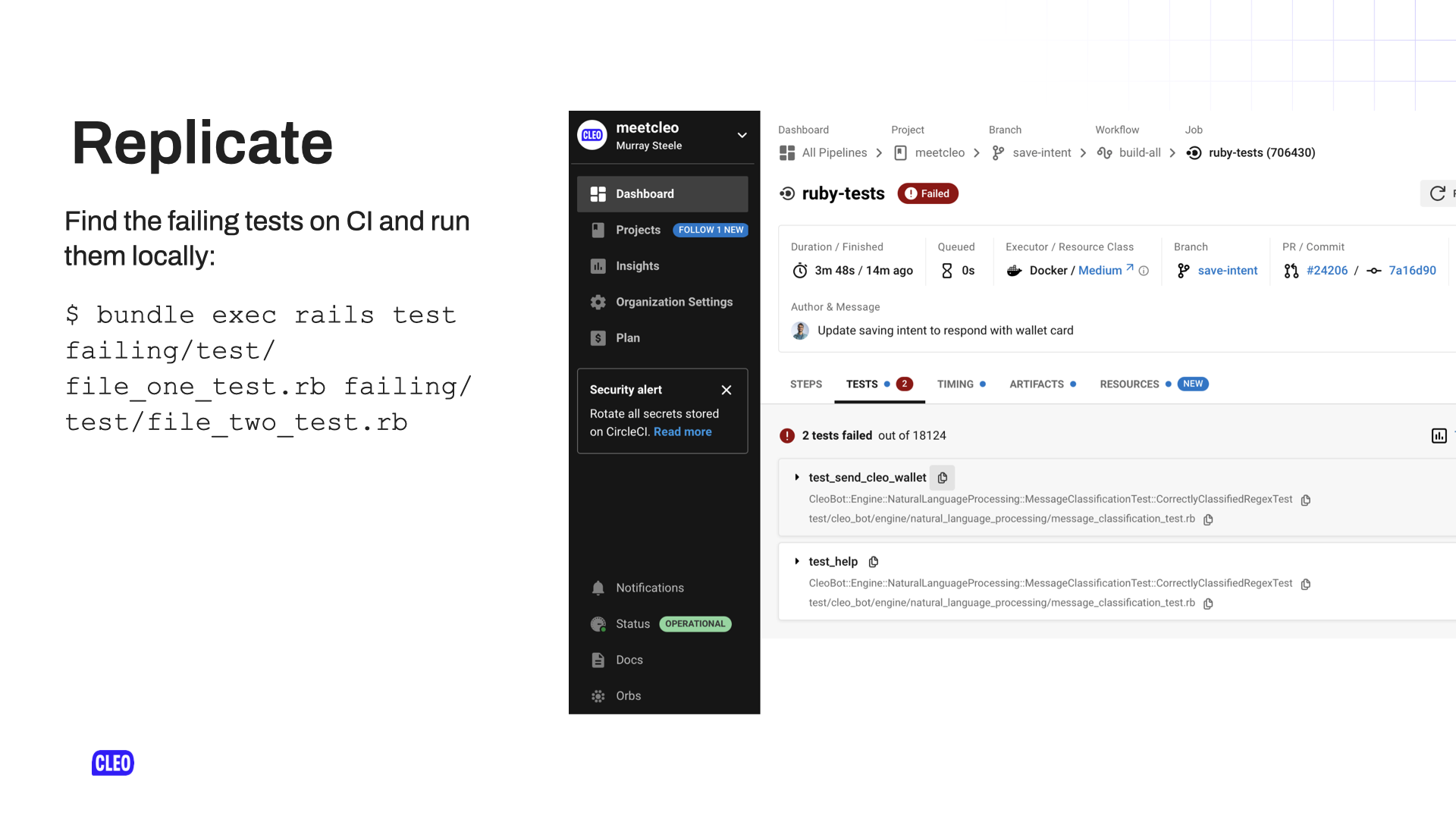

Replicate

The first thing you’re going to want to do is to replicate it. Chances are you’re not running the test suite and getting flaky failures on your local build, or, you don’t care about them because they’re not stopping you from deploying. It’s the flaky build on your CI platform. We use circle, you maybe use github actions or buildkite or something else I’ve not heard of. Whatever it is you use, it will tell you what test actually failed.

If you are lucky you just grab the name of the failing test and when you run it, it fails locally for you. But if that was the case, why did you commit it?

So we’re going to have to do something else.

Replicate v2

I mean, maybe you just run it a few times and it’ll fail. And you’re like, “Okay, cool”. But life’s not often like that.

Actually, what you’re going to want is the seed value. You have to run the whole suite, and you want the seed value to run it in the same random order that your test suite used when it flaked on CI.

Most test suites run in a random order. If your test suites are not running in a random order, congratulations, you’ve got rid of one whole category of problems with flaky tests. But you’ve probably given yourself some other category of flaky tests, which is that they’re dependent. That’s a different talk.

You’re going to get a seed value and you’re going to want to get the list of tests that actually ran.

Our test suite is, humble brag, pretty big, so we split it across 8 runners on CI, which means only an 8th of the test suite runs on every instance. So if I just tried to take the seed value and run the entire test suite, it wouldn’t run in the same random order as CI had run it. So I need to know the exact set of things that ran.

What I do is just go and copy and paste the list of tests and then paste them into a text file, turn that into a terminal command, then paste that into my terminal. My terminal doesn’t like that because it’s a massive command, but it does actually run.
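If you would rather script that step, here is a sketch that turns a pasted list of files and a seed into one command line. It assumes you run tests through the rails test runner (`bin/rails test`, which as far as I know accepts `--seed`); the file names and seed below are made up, and `replay_command` is a hypothetical helper.

```ruby
require "shellwords"

# Build a single command line from the seed and the list of test files that
# one CI runner reported.
def replay_command(seed:, files:)
  ["bin/rails", "test", *files, "--seed", seed.to_s].shelljoin
end

# Paste the list of files from the CI output between the markers.
ci_files = <<~LIST.split
  test/models/user_test.rb
  test/services/payment_test.rb
LIST

puts replay_command(seed: 12345, files: ci_files)
```

Writing the command to a script file instead of pasting it also sidesteps the terminal complaining about a massive paste.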

Then you can replicate it locally with the same seed value as CI, and hopefully you’ve got a reproducible test case. So now, if you’re like us, you’ve only got an eighth of the test suite to reason about rather than all of the test suite. So thank you, and good night, I have saved you seven eighths of your life.

No, there’s more.

minitest-bisect

![Slide 31: minitest-bisect text: minitest-bisect; $ bundle add minitest-bisect; $ bundle exec minitest_bisect --seed [CI SEED VALUE] -Itest [LIST OF TEST FILES FROM CI]; ⏳Time passes⌛️;Final reproduction: Run options: --seed [CI SEED VALUE] -n](http://assets.h-lame.com/images/talks/do-you-want-a-flake-with-that/slides/031.png)

Once you have worked out what are the actual tests that CI ran, and you’ve got a failing case locally using a particular seed value and a particular set of tests, then you can feed that into minitest-bisect. There is an RSpec version of this, in fact, I think it’s baked in. So you don’t need a separate gem. RSpec thinking about user experience. Who would have thought?

So you feed all that into minitest-bisect:

- you pass in the seed value

- you pass in the list of test files

- you press return,

Then you go about your life for hours. Probably it is going to take some time, because what this is going to do first is – it’s going to run your tests to establish that it definitely fails. Then it’s going to do a binary search over the total number of tests that were run to work out what is the minimum reproducible case. And hopefully, what this means is you get down to:

- run this test,

- then this test,

- and then the one that you were told by CI is flaky will fail.

And that gets you from an 8th of the test suite to 2, maybe 3 tests. Depending on how slow an 8th of your test suite is, this could take some time. It’s the kind of thing to do towards the end of the day; you hit return and you come back to it in the morning. If you’re lucky it does actually give you a reproducible test case, because I am not going to lie, I have done this, gone away and come back like the next day, and it’s still like “nah mate, can’t sort this one”. But most of the time this will get you somewhere, and then…

Have you tried… (reprise)

…you can try just thinking really hard and hoping that you solve it because you’re looking at 2 test cases.

You’ve got one that’s running before yours that is presumably the smoking gun here. It did something that it didn’t clear up and your one is like, “why is the world not how I cared about? I’m failing!”

And so you do have to think about it really hard. But I’ve shared some ideas of what to look out for, and I’ve shared some tips to get to this point.

I think hopefully then you can solve the flaky tests and feel bad about committing them in the first place.

Elsewhere

Elsewhere, if this talk wasn’t enough:

- There was a really good talk at Rubyconf in November by Alan Ridlehoover called “The secret ingredient: How to understand and resolve just about any flaky test”. If you want more of this hot content, go watch that.

- There’s some more details on minitest-bisect

- And RSpec has bisect too. I put that in just for Jon.

Thanks for listening!